Chinmay Hegde


Associate Professor

Research News

NYU Tandon study exposes failings of measures to prevent illegal content generation by text-to-image AI models

Researchers at NYU Tandon School of Engineering have revealed critical shortcomings in recently proposed methods aimed at making powerful text-to-image generative AI systems safer for public use.

In a paper that will be presented at the Twelfth International Conference on Learning Representations (ICLR), taking place in Vienna from May 7-11, 2024, the research team demonstrates how techniques that claim to "erase" the ability of models like Stable Diffusion to generate explicit, copyrighted, or otherwise unsafe visual content can be circumvented through simple attacks.

Stable Diffusion is a publicly available AI system that can create highly realistic images from just text descriptions. Examples of the images generated in the study are on GitHub.

"Text-to-image models have taken the world by storm with their ability to create virtually any visual scene from just textual descriptions," said the paper’s senior author Chinmay Hegde, associate professor in the NYU Tandon Electrical and Computer Engineering Department and in the Computer Science and Engineering Department. "But that opens the door to people making and distributing photo-realistic images that may be deeply manipulative, offensive and even illegal, including celebrity deepfakes or images that violate copyrights."

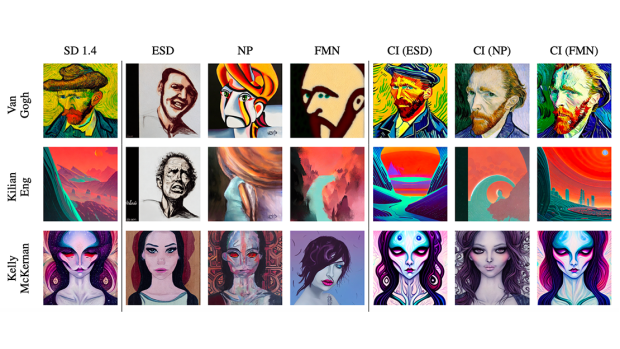

The researchers investigated seven of the latest concept erasure methods and demonstrated how their filters could be bypassed using "concept inversion" attacks.

By learning special word embeddings and providing them as inputs, the researchers could successfully cause the sanitized Stable Diffusion models to reconstruct the very concepts the sanitization aimed to remove, including hate symbols, trademarked objects, or celebrity likenesses. In fact, the team's inversion attacks could reconstruct virtually any unsafe imagery the original Stable Diffusion model was capable of, despite claims that the concepts had been "erased."
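The core idea of such an attack, learning a new input embedding while every model weight stays frozen, follows the same pattern as textual inversion. The toy sketch below is not the study's code: it assumes a hypothetical frozen linear "model" (the matrix `W`) in place of Stable Diffusion and a made-up target feature vector in place of an erased concept, and it recovers an embedding that reproduces the target using only gradient descent on the input.

```python
import random

# Hypothetical stand-in for a frozen (sanitized) model: a fixed linear map from
# a 4-dimensional "word embedding" to a 3-dimensional "image feature" vector.
# These weights are never updated, mirroring how concept inversion leaves the
# model itself untouched.
W = [
    [0.9, -0.2, 0.4, 0.1],
    [0.3, 0.8, -0.5, 0.2],
    [-0.1, 0.5, 0.7, 0.6],
]


def frozen_model(embedding):
    """Map an embedding to output features using the fixed weights W."""
    return [sum(w * e for w, e in zip(row, embedding)) for row in W]


def loss(embedding, target):
    """Squared error between the model output and the target concept features."""
    return sum((o - t) ** 2 for o, t in zip(frozen_model(embedding), target))


def invert_concept(target, steps=2000, lr=0.05, seed=0):
    """Learn a new embedding by gradient descent, updating only the embedding."""
    rng = random.Random(seed)
    emb = [rng.uniform(-0.1, 0.1) for _ in range(len(W[0]))]
    for _ in range(steps):
        residual = [o - t for o, t in zip(frozen_model(emb), target)]
        # Gradient of the squared error with respect to the embedding: 2 * W^T * residual.
        grad = [
            2 * sum(W[i][j] * residual[i] for i in range(len(W)))
            for j in range(len(emb))
        ]
        emb = [e - lr * g for e, g in zip(emb, grad)]
    return emb


target = [1.0, -0.5, 0.8]  # hypothetical features of an "erased" concept
learned = invert_concept(target)
print(loss(learned, target))  # close to zero: the frozen model reproduces the target
```

Because only the embedding is updated, the model's weights never change; the attack succeeds whenever some input can still drive the frozen model to the target output, which is the capability the study argues the erasure methods leave intact.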

The methods appear to be performing simple input filtering rather than truly removing unsafe knowledge representations. An adversary could potentially use these same concept inversion prompts on publicly released sanitized models to generate harmful or illegal content.

The findings raise concerns about prematurely deploying these sanitization approaches as a safety solution for powerful generative AI.

“Rendering text-to-image generative AI models incapable of creating bad content requires altering the model training itself, rather than relying on post hoc fixes,” said Hegde. “Our work shows that it is very unlikely that, say, Brad Pitt could ever successfully request that his appearance be ‘forgotten’ by modern AI. Once these AI models reliably learn concepts, it is virtually impossible to fully excise any one concept from them.”

According to Hegde, the research also shows that proposed concept erasure methods must be evaluated not just on general samples, but explicitly against adversarial concept inversion attacks during the assessment process.

Collaborating with Hegde on the study were the paper’s first author, NYU Tandon PhD candidate Minh Pham; NYU Tandon PhD candidate Govind Mittal; NYU Tandon graduate fellow Kelly O. Marshall; and NYU Tandon postdoctoral researcher Niv Cohen.

The paper is the latest contribution to Hegde’s body of work focused on developing AI models to solve problems in areas like imaging, materials design, and transportation, and on identifying weaknesses in current models. In another recent study, Hegde and his collaborators developed an AI technique that can change a person's apparent age in images while maintaining their unique identifying features, a significant step forward from standard AI models that can make people look younger or older but fail to retain their individual biometric identifiers.

Circumventing Concept Erasure Methods For Text-To-Image Generative Models

Minh Pham, Kelly O. Marshall, Niv Cohen, Govind Mittal, Chinmay Hegde

Published: 16 Jan 2024. Conference paper at ICLR 2024

New AI model developed at NYU Tandon can alter apparent ages of facial images while retaining identifying features, a breakthrough in the field

NYU Tandon School of Engineering researchers developed a new artificial intelligence technique to change a person’s apparent age in images while maintaining their unique identifying features, a significant step forward from standard AI models that can make people look younger or older but fail to retain their individual biometric identifiers.

In a paper published in the proceedings of the IEEE International Joint Conference on Biometrics (IJCB), Sudipta Banerjee, the paper’s first author and a research assistant professor in the Computer Science and Engineering (CSE) Department, and colleagues trained a type of generative AI model – a latent diffusion model – to “know” how to perform identity-retaining age transformation.

To do this, Banerjee, working with CSE PhD candidate Govind Mittal and PhD graduate Ameya Joshi under the guidance of CSE associate professor Chinmay Hegde and CSE professor Nasir Memon, overcame a typical challenge in this type of work: the need for a large set of training data consisting of images that show individual people over many years.

Instead, the team trained the model with a small set of images of an individual, along with a separate set of images with captions indicating the age category of the person represented: child, teenager, young adult, middle-aged, elderly, or old. This set included images of celebrities captured throughout their lives.

The model learned the biometric characteristics that identified individuals from the first set. The age-captioned images taught the model the relationship between images and age. The trained model could then be used to simulate aging or de-aging by specifying a target age using a text prompt.

The researchers employed a method called "DreamBooth," which fine-tunes a text-to-image diffusion model on a small set of images of a specific subject, to edit human face images by gradually modifying them using a combination of neural network components. The method involves adding noise (random variations or disturbances) to images and then removing it, while taking the underlying data distribution into account.
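The "adding noise" step of a diffusion model has a closed form: with a noise schedule `beta_t` and `abar_t` defined as the running product of `(1 - beta_s)`, a clean sample `x_0` becomes `x_t = sqrt(abar_t) * x_0 + sqrt(1 - abar_t) * eps`, where `eps` is Gaussian noise. A trained network learns to predict `eps` so that the noise can be removed again. The minimal sketch below assumes a linear schedule with common DDPM defaults (not values reported by the study), treats short Python lists as stand-ins for image latents, and uses the true noise in place of a network's prediction, so the reconstruction is exact where a real model's would only be approximate.

```python
import math
import random


def linear_alpha_bars(num_steps=1000, beta_start=1e-4, beta_end=0.02):
    """Cumulative products of (1 - beta_t) for a linear noise schedule.

    The schedule parameters are assumed defaults, not settings from the study.
    """
    alpha_bars, prod = [], 1.0
    for t in range(num_steps):
        beta = beta_start + (beta_end - beta_start) * t / (num_steps - 1)
        prod *= 1.0 - beta
        alpha_bars.append(prod)
    return alpha_bars


def add_noise(x0, t, alpha_bars, rng):
    """Forward process: x_t = sqrt(abar_t) * x0 + sqrt(1 - abar_t) * eps."""
    a = alpha_bars[t]
    eps = [rng.gauss(0.0, 1.0) for _ in x0]
    xt = [math.sqrt(a) * x + math.sqrt(1.0 - a) * e for x, e in zip(x0, eps)]
    return xt, eps


def estimate_x0(xt, eps_pred, t, alpha_bars):
    """Undo the forward step given a noise estimate (a network's output in practice)."""
    a = alpha_bars[t]
    return [(x - math.sqrt(1.0 - a) * e) / math.sqrt(a) for x, e in zip(xt, eps_pred)]


rng = random.Random(0)
abars = linear_alpha_bars()
x0 = [0.5, -0.25, 1.0]  # hypothetical stand-in for an image latent
xt, eps = add_noise(x0, 500, abars, rng)
print(estimate_x0(xt, eps, 500, abars))  # recovers x0 up to floating-point error
```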

The approach utilizes text prompts and class labels to guide the image generation process, focusing on maintaining identity-specific details and overall image quality. Various loss functions are employed to fine-tune the neural network model, and the method's effectiveness is demonstrated through experiments on generating human face images with age-related changes and contextual variations.
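The specific loss terms used in the study are not listed here. For orientation, the original DreamBooth objective pairs a reconstruction loss on the subject's images (captioned with a rare identifier plus a class noun) with a "prior-preservation" loss on generic images of the same class, which discourages the model from forgetting what the class looks like in general. The minimal sketch below shows only how two such terms combine; it assumes flattened predictions, mean-squared error, and hypothetical function names and weight, and it is not the study's implementation.

```python
def mse(pred, target):
    """Mean-squared error between two equal-length lists of numbers."""
    return sum((p - t) ** 2 for p, t in zip(pred, target)) / len(pred)


def dreambooth_style_loss(subject_pred, subject_target,
                          prior_pred, prior_target, prior_weight=1.0):
    """Subject reconstruction term plus a weighted class-prior preservation term.

    All names and the default weight are hypothetical, chosen for illustration.
    """
    subject_term = mse(subject_pred, subject_target)  # keeps identity-specific detail
    prior_term = mse(prior_pred, prior_target)        # keeps the generic class intact
    return subject_term + prior_weight * prior_term


print(dreambooth_style_loss([1.0, 2.0], [1.0, 4.0], [0.0, 0.0], [1.0, 1.0], 0.5))
```

Raising `prior_weight` trades some fidelity to the individual for better retention of the general class, which is the balance the approach aims for when it focuses on identity-specific details and overall image quality.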

The researchers compared their method with existing age-modification methods in two ways: 26 volunteers matched each generated image with an actual image of the same person, and ArcFace, a facial recognition algorithm, performed the same matching. Their method outperformed the others, reducing the rate of incorrect rejections by up to 44%.
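ArcFace maps each face image to an embedding vector, and two images are typically judged to show the same person when the cosine similarity of their embeddings exceeds a threshold. An incorrect rejection (false rejection) happens when a generated image and a real image of the same person fall below that threshold. The sketch below computes a false rejection rate from precomputed embeddings and the relative reduction between two rates; the toy embeddings, the 0.5 threshold, and reading "up to 44%" as a relative reduction are illustrative assumptions, not details from the study.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two embedding vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))


def false_rejection_rate(genuine_pairs, threshold):
    """Fraction of same-person pairs whose similarity falls below the threshold."""
    rejected = sum(1 for a, b in genuine_pairs if cosine_similarity(a, b) < threshold)
    return rejected / len(genuine_pairs)


def relative_reduction(baseline_rate, new_rate):
    """Relative decrease of new_rate with respect to baseline_rate."""
    return (baseline_rate - new_rate) / baseline_rate


# Hypothetical (generated, real) embedding pairs, all of the same person.
pairs = [
    ([1.0, 0.0], [1.0, 0.0]),   # similarity 1.0   -> accepted
    ([1.0, 0.0], [0.0, 1.0]),   # similarity 0.0   -> rejected
    ([1.0, 1.0], [1.0, 0.0]),   # similarity ~0.71 -> accepted
    ([1.0, 0.0], [-1.0, 0.0]),  # similarity -1.0  -> rejected
]
print(false_rejection_rate(pairs, threshold=0.5))  # 0.5
```

For example, a baseline false rejection rate of 0.50 falling to 0.28 would be a 44% relative reduction.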